The amount of data generated, copied, consumed, and captured globally reached an estimated 149 zettabytes in 2024. Enterprises need big data technologies to store, process, and analyze this data. The great news is that several reliable big data technologies are available to choose from in 2025.

But before making any choices, take time to understand their features, pros, and cons. Knowing this helps you pick the type that best fits your business.

This article covers some of the leading big data technologies you can use in 2025. It also analyzes the benefits of the different big data technologies and techniques.

Put simply, big data is a composite of structured, semi-structured, and unstructured data that companies collect to mine for information. Structured data comes in a fixed format. Semi-structured data carries some organizing markers, such as tags or keys, but does not conform to the data models of relational databases. Unstructured data, such as free text, images, or video, has no predefined format. Over 80% of the data accumulated by enterprises today is either unstructured or semi-structured.

Forward-thinking businesses are using some of the latest big data technologies and applications to spur growth. These apps facilitate the analysis of colossal amounts of real-time data. The analyses help to minimize the chances of business failure through predictive modeling and several other sophisticated analytics. Once you know what big data technology is, it also helps to understand cloud-based big data technologies. Fundamentally, these are on-demand computer system resources, particularly for secure data storage and processing. Usually, they operate without direct management by the user.

To effectively manage these technologies, it’s crucial to have a solid understanding of cloud security principles. You can enhance your knowledge through resources like Certification Courses.

The big data implementation examples include the following:

In 2025, there will be more computing innovations that use data, such as Machine Learning (ML), advanced analytics, and Artificial Intelligence (AI). The storage of big data will require and spur innovations in cloud, hybrid cloud, and data lake technologies. Furthermore, this industry will see advances in big data processing technologies, which will give rise to edge computing. These big data innovations will continue to grow, even beyond this year.

Technologies used in big data can be placed in two main classes, which are Operational and Analytical Big Data Technologies.

Operational Big Data Technologies offer operational features for managing real-time, interactive workloads. The collected data is raw and can be fed to Analytical Big Data Technologies for further analysis.

Operational Big Data examples include:

Analytical Big Data Technologies are more sophisticated than Operational Big Data Technologies. They are the platforms where the performance of a business is analyzed and forecast.

A few examples of Analytical Big Data are as follows:

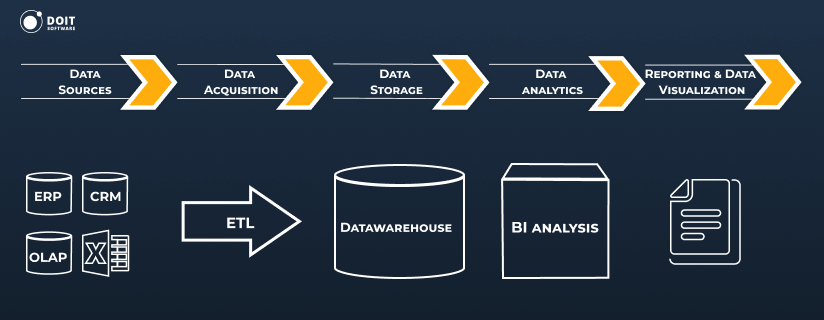

All emerging big data technologies can be divided into four fields: Data Mining, Storage, Analytics, and Visualization. Each of these big data methods comes with independent capabilities. You need to know each of these categories, since some of their tools suit only specific types of businesses. Before implementing any of the methods, compare the available big data tools to choose the ones that work best for your business.

Big data storage technology comprises infrastructure designed to fetch, store, and manage big data. Data is organized in a manner that allows easy access, usage, and processing by various programs. Cloud enablement services have revolutionized the big data industry by providing scalable and flexible infrastructure for storing and analyzing massive amounts of data.

Some of the top big data tools for this type of work include:

The Hadoop technology stack provides a framework for distributed storage and big data processing using the MapReduce programming model. The perks of this Apache big data stack include its ability to store and process distributed data quickly and at reasonable cost. Big data technologies like Hadoop are scalable, resilient to failure, and very flexible. The downsides of Hadoop include weak security, poor performance with large numbers of small files, and support for batch processing only. You can use Spark as an alternative to the Hadoop technology stack.
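To make the MapReduce programming model more concrete, here is a minimal single-machine sketch in plain Python. It is not Hadoop code and does not use any Hadoop API; the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) are illustrative assumptions, and the classic word count is used only because it is the simplest job to follow.

```python
from collections import defaultdict


def map_phase(document):
    """Emit a (word, 1) pair for every word in one input document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(pairs):
    """Group emitted values by key, as a framework does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Combine all values collected for one key into a single result."""
    return key, sum(values)


def word_count(documents):
    # On a real cluster, each document (or block) would be mapped on a different node.
    pairs = [pair for doc in documents for pair in map_phase(doc)]
    grouped = shuffle_phase(pairs)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


documents = ["big data needs big storage", "data drives decisions"]
counts = word_count(documents)
print(counts)
```

Because each map call looks at only one document and each reduce call looks at only one key, both phases can be spread across many machines, which is where the scalability described above comes from.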

MongoDB is one of the big data database technologies: a NoSQL database that stores data as JSON-like documents. Instead of the fixed table schema of relational databases, it uses a flexible document schema. This enables it to handle many data types in vast amounts across distributed architectures. The main advantages of MongoDB are flexibility and scalability, helped by how easy it is to use replica sets on this database. On the other hand, MongoDB can be slow for some workloads and tends to increase the size of stored data.

RainStor is database management software that handles big data for businesses. It can eliminate duplicate files as it sorts and stores huge volumes of information for reference. The latest version of this software can handle big data sets with high ingest rates. It also comes with multi-tenancy features and supports cloud storage. RainStor can shrink large data sets for storage in the cloud: a terabyte can be reduced to 25 GB. Other perks of RainStor include lower costs.
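For a sense of scale, the terabyte-to-25 GB figure can be turned into a compression ratio with a few lines of arithmetic. The sketch below assumes decimal units (1 TB = 1,000 GB); the helper name `compression_stats` is made up for this illustration.

```python
def compression_stats(original_gb, compressed_gb):
    """Return (compression ratio, percentage of space saved)."""
    ratio = original_gb / compressed_gb
    saved_pct = (original_gb - compressed_gb) / original_gb * 100
    return ratio, saved_pct


# Assumption: 1 TB is treated as 1,000 GB (decimal units).
ratio, saved_pct = compression_stats(1000, 25)
print(f"ratio {ratio:.0f}:1, space saved {saved_pct:.1f}%")
```

Under that assumption, the quoted figure works out to a ratio of about 40:1, or roughly 97.5% less storage.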

Data mining is the extraction of valuable information from raw data. Usually, this data comes in large volumes, with high variability, and streams in at tremendous velocity, which makes extraction practically impossible without specialized technology. Instead of using only a SQL text editor, check out some of the top big data technologies used for data mining.

Presto is a distributed SQL query engine used for running analytic queries against a variety of data sources, e.g., Cassandra, Hadoop, MySQL, and MongoDB. One of the strengths of Presto is that it allows users to query data from several sources through one query.

On the downside, if a worker node fails, the queries running on it fail as well. There is also no caching layer in Presto, so frequently repeated ("hot") queries do not get faster.
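The idea of joining several sources in one query can be illustrated without a Presto cluster. The sketch below is only an analogy: it uses Python's built-in `sqlite3` module and attaches two separate in-memory databases, whereas Presto addresses its sources as `catalog.schema.table`. The database names `crm` and `sales`, the tables, and the rows are all invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Two independent in-memory databases stand in for two separate data sources.
conn.execute("ATTACH DATABASE ':memory:' AS crm")
conn.execute("ATTACH DATABASE ':memory:' AS sales")

conn.execute("CREATE TABLE crm.customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE TABLE sales.orders (customer_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO crm.customers VALUES (?, ?)",
    [(1, "Acme"), (2, "Globex")],
)
conn.executemany(
    "INSERT INTO sales.orders VALUES (?, ?)",
    [(1, 120.0), (1, 80.0), (2, 50.0)],
)

# A single SQL statement joins data that lives in both sources.
rows = conn.execute(
    """
    SELECT c.name, SUM(o.amount) AS total
    FROM crm.customers AS c
    JOIN sales.orders AS o ON o.customer_id = c.id
    GROUP BY c.name
    ORDER BY c.name
    """
).fetchall()
print(rows)
```

In Presto the two sources could be entirely different systems, for example a MySQL database and a Hadoop cluster, but the query would have the same shape.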

RapidMiner is a centralized software package for mining data and running predictive analytics. Users can load large volumes of raw data, e.g., databases and text, for instant and intelligent analysis. Additionally, RapidMiner allows for sophisticated workflows, with scripting support in several languages.

Some of the notable strengths of RapidMiner include user-friendliness and affordability. As for weaknesses, sharing analyses from RapidMiner Studio is difficult. RapidMiner is also not very convenient for business analytics dashboards.

Elasticsearch is a full-text search and analytics engine that allows users to store, search, and analyze massive data volumes in near real time. It is often used as the underlying engine that powers applications with sophisticated search features and requirements.

Some of the advantages of Elasticsearch include fast search and the ability to filter large datasets. Also, Elasticsearch allows for customizable analytics & reports through its dynamic aggregation engine. On the downside, Elasticsearch has a complicated ingest pipeline structure.
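Fast full-text search of this kind rests on an inverted index, the data structure used by Lucene, the library Elasticsearch is built on. The following is a toy, in-memory sketch in plain Python, not the Elasticsearch API; the functions `build_inverted_index` and `search` and the sample log lines are assumptions made for illustration.

```python
from collections import defaultdict


def build_inverted_index(docs):
    """Map every token to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in text.lower().split():
            index[token].add(doc_id)
    return index


def search(index, query):
    """Return ids of documents that contain every term in the query."""
    terms = query.lower().split()
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


docs = {
    1: "error connecting to database",
    2: "database backup completed",
    3: "user login error",
}
index = build_inverted_index(docs)
hits = search(index, "database error")
print(hits)
```

A lookup touches only the entries for the query terms instead of scanning every document, which is why search stays fast as the data set grows. A production engine adds tokenization rules, relevance scoring, and distribution across nodes on top of this idea.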

Big data analytics involves cleaning, transforming, and modeling data to discover information useful for making decisions. The information obtained from big data analytics tools includes correlations, hidden patterns, customer preferences, and market trends. A variety of sophisticated applications with elements such as statistical algorithms and predictive models are often used. This is especially relevant to analytics for SaaS. Below are some of the data analysis technologies you should know.

Kafka is a distributed streaming platform whose key capabilities center on publishing, subscribing to, and consuming streams of records. Kafka is open-source software that provides a unified, low-latency, high-throughput platform for handling real-time data feeds. Another benefit of this platform is its ability to scale horizontally. A commonly cited weakness of Kafka is the absence of good monitoring solutions.
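The publish and subscribe model can be pictured as an append-only log that each group of consumers reads at its own pace. The sketch below is a single-process stand-in written in plain Python; it does not use a Kafka client, and the class `TopicLog` with its `publish` and `poll` methods is a hypothetical name chosen for this example.

```python
class TopicLog:
    """In-memory stand-in for one topic partition: an append-only list of records."""

    def __init__(self):
        self._records = []
        self._offsets = {}  # consumer group name -> next offset to read

    def publish(self, record):
        """Append a record and return the offset it was stored at."""
        self._records.append(record)
        return len(self._records) - 1

    def poll(self, group, max_records=10):
        """Return the next unread records for a group and advance its offset."""
        start = self._offsets.get(group, 0)
        batch = self._records[start:start + max_records]
        self._offsets[group] = start + len(batch)
        return batch


log = TopicLog()
for event in ["click", "view", "purchase"]:
    log.publish(event)

analytics_batch = log.poll("analytics", max_records=2)
billing_batch = log.poll("billing")
analytics_rest = log.poll("analytics")
print(analytics_batch, billing_batch, analytics_rest)
```

Records are never removed when they are read, so the `analytics` and `billing` groups each see the full stream independently. Splitting a topic into many such logs on different machines is what allows the horizontal scaling mentioned above.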

Splunk is software that allows you to uncover the hidden value of data. It indexes and correlates real-time data via a searchable repository. It is from the repository that it creates reports, graphs, dashboards, alerts, and visualizations. Splunk can also be used for managing apps, improving security, and for business & web analytics. Some of the benefits of Splunk include:

The only downside of Splunk is that it can be difficult to learn for new users.

KNIME enables users to build visual data flows, execute selected steps of those flows, and view the results. This gives a better understanding of data, data science workflows, and reusable components. The perks of using KNIME include its ability to connect to various data sources and the control it provides over what happens with data at every stage. KNIME also offers many reusable functionalities. These are components verified by KNIME experts, and users can reuse them as their own personalized KNIME nodes for tasks that repeat often.

The downside of KNIME is that simple tasks can take a long time, and there are usually problems with data imports & merging files.

Big Data visualization uses powerful computers to ingest raw data derived from corporations. This data is processed to form graphic illustrations that enable people to comprehend large amounts of information in seconds. Below are some top technologies you can use for data visualization.

Data visualization in Google Sheets allows you to create charts and graphs from your spreadsheet data. This can be a great way to see trends, patterns, and relationships in your data. Google Sheets provides various chart types, including bar charts, line charts, pie charts, and more. You can also customize them to suit your specific requirements.

Tableau eliminates the need for advanced knowledge of query languages to understand big data. The platform provides a clean visual interface for that purpose. The resulting data visuals come in the form of worksheets and dashboards.

In the business intelligence industry, Tableau is often preferred for its high speed of data analysis. Its visual and design capabilities also surpass those of many data visualization technologies. However, there are a few limitations to point out: Tableau comes with a high cost, security problems, inflexible pricing, and poor customer support.

Plotly is a graphing library, best known for its Python package, that is used for interactive big data visualizations. It enables users to create high-quality graphs faster and more efficiently.

Plotly is known for many benefits, including user-friendliness, scalability, lower costs, cutting-edge analytics, and ease of customization. On the downside, Plotly doesn't have as much community support as other data visualization platforms such as Tableau. In addition, there is no seamless way to put its visualizations into a presentation.

Several types of big data technology have been mentioned above, along with some of the best tools for mining, analysis, storage, and visualization. They are developed by a variety of big data companies. As mentioned before, it's important to research further before picking any specific big data tool or technique. Each of them is unique and suits specific kinds of businesses.

Big data has improved the way people organize, analyze, and leverage information in all industries. Here is what you should know.

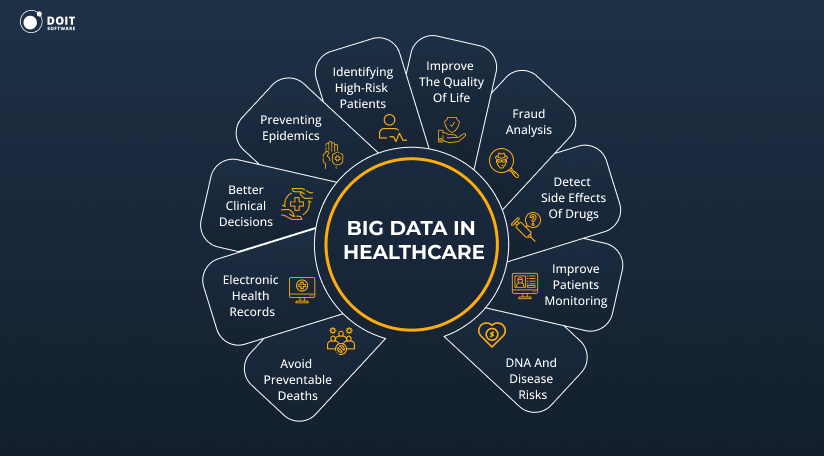

Big data technologies in healthcare process and analyze large amounts of information generated through digital technologies. The information includes patient records and other essential details that are usually too intricate for traditional technologies. The insights acquired can help to mitigate potential disease outbreaks, cure diseases, and minimize costs. Other uses of big data may include:

In healthcare, big data's transformative impact can also be observed in technologies like the Electronic Medication Administration Record (eMAR) system. An eMAR system optimizes patient care by managing medication administration records electronically, reducing errors and improving overall patient safety. Such advancements illustrate how healthcare providers can leverage data to enhance patient outcomes and streamline critical processes.

Problems fixed by big data in the healthcare industry

Big data has been instrumental in enabling healthcare providers to share anonymized patient data. Medical practitioners can use big data to learn more about how certain health issues can be tackled. This minimizes the chances of errors that result in longer and more burdensome treatments or the unnecessary death of patients.

Another notable benefit of big data is that it has pushed healthcare providers to be accountable. Information regarding their performance can now be captured easily, and they are paid based on their patients' experiences.

Other examples of big data applications in the healthcare industry

In the healthcare sector, big data can be applied in:

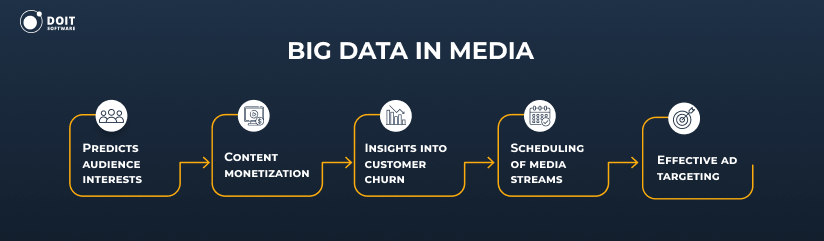

In the media and entertainment sector, big data helps publishers and advertisers to analyze and identify the content and types of ads that consumers prefer. Additionally, big data enables them to pick the best times and durations for streaming their media. In this way, click-through rates (CTRs) and conversions are boosted. Other uses of big data in media include keeping consumers engaged.

Problems solved by big data in media

Big data has been instrumental in managing media. By facilitating targeted advertising, big data minimizes overspending in marketing campaigns. Business owners no longer waste resources on the wrong audience. Additionally, big data allows marketers to accurately analyze the return on investment for campaigns. They no longer advertise blindly.

In the insurance sector, big data helps brokers to analyze trends and patterns. This helps to assess risks, spot fraud, and introduce business-friendly policies. In claims management, big data makes it easier to assess damage and automate claims. If there are any anomalies, they can be picked up easily. Other uses of big data in insurance include:

For example, with the help of advanced analytics, trucking companies can better predict and mitigate the risks associated with transporting goods. Big data technologies enable these companies to collect and analyze vast amounts of data from various sources, such as traffic patterns, weather conditions, and historical accident data. This allows them to make more informed decisions about their trucking cargo insurance coverage, resulting in greater efficiency, improved safety, and reduced costs.

Problems that big data solves in the insurance business

Fraud is one of the major problems that concern insurance companies. Big data reduces this problem significantly by simplifying even the most complex cases. Business managers can also quickly identify and evaluate high-risk prospective clients. Once identified, these clients can be excluded to lower risk. This allows legitimate policyholders to pay lower premiums.

With the vast amount of data generated by insurance companies, there is a need for a secure and centralized location, like a dataroom, to store and manage information related to policies, claims, and customer data.

Advanced data technologies add value to IoT by monitoring, collecting, and analyzing the information generated and exchanged between industrial devices. This makes data flow less rigid than it was previously. IoT development also needs big data to store and process huge volumes of data in real time to produce reports showing:

Problems solved by big data in the IoT industry

Leveraging IoT product engineering can enhance real-time data processing and insights.

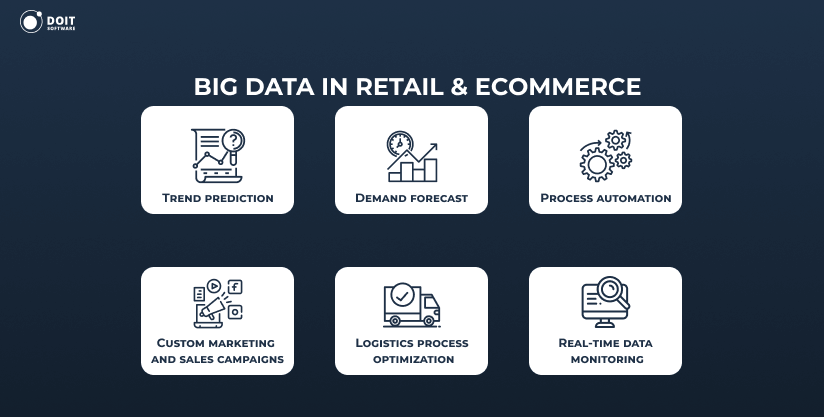

Big data can be used in retail and e-commerce businesses to understand demand in the market. Managers can also send emails announcing customized discounts and promotions to retain old customers. Additionally, big data allows retailers to give personalized ordering recommendations. Other uses of big data in this business are:

Problems that big data solves in the retail/ e-commerce industry

Retail businesses commonly struggle to fully understand their customers' behavior and preferences. Big data gives them a much better understanding of their customers. This enables enterprises to scale predictably. Managers can use metrics dashboards instead of gut feeling to make critical decisions.

The financial services and banking industry generates large volumes of data, because every digital interaction leaves a data footprint. A data analytics consulting company can assist in managing and processing all this information. Big data enables fintech companies to run time-consuming and costly tasks, such as credit risk scoring, much more quickly and cost-effectively. It also allows fintech companies to store data, acquire business insights, and enhance growth. Other benefits of big data include:

Big data technologies are continually evolving, offering robust solutions for processing massive datasets. While these tools provide actionable insights for numerous applications, using the right components, such as enhanced spreadsheet functionality, adds value to data workflows. JavaScript Spreadsheet Components can help to manage and visualize complex financial models within big data frameworks, which is useful in industries such as finance and logistics.

Problems that big data improves in the fintech industry

Fraud is one of the biggest challenges in the banking and fintech industry, which makes Know Your Business (KYB) procedures essential for detecting and preventing fraudulent entities. To minimize fraud, big data enables customer behavior to be modeled. The modeled behavior is then compared with the actual conduct of the client. If any suspicious actions are detected, the system alerts the customer, highlighting potential risks and enabling swift action. Incorporating leading software for banks further enhances fraud detection capabilities, protecting against fraudulent activities and bolstering the security of financial transactions.
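A very simple version of this "model the behavior, then compare" approach is sketched below in Python. It assumes the behavior model is nothing more than the mean and standard deviation of a customer's past transaction amounts, and that a transaction is suspicious when it lies more than three standard deviations from the mean. The function names, the threshold, and the sample amounts are illustrative assumptions; real systems use far richer models.

```python
from statistics import mean, stdev


def build_profile(amounts):
    """Model a customer's usual behavior as (average amount, standard deviation)."""
    return mean(amounts), stdev(amounts)


def is_suspicious(amount, profile, threshold=3.0):
    """Flag a transaction that deviates strongly from the customer's profile."""
    average, spread = profile
    if spread == 0:
        # All past amounts were identical, so any different amount stands out.
        return amount != average
    return abs(amount - average) / spread > threshold


# Hypothetical transaction history for one customer.
history = [42.0, 38.5, 51.0, 45.2, 40.3, 47.8]
profile = build_profile(history)

flags = {amount: is_suspicious(amount, profile) for amount in (44.0, 900.0)}
print(flags)
```

Here the 44.0 transaction matches the customer's usual spending and passes, while the 900.0 transaction is flagged and would trigger the alert described above.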

To make data work for you, you need to hire data engineers. These engineers are in charge of building and managing an organization's big data infrastructure and tools. They also know how to obtain results quickly. Here is what you should consider when hiring a big data engineer.

Hard skills should cover both software and technology skills and operational skills. Below is an elaboration of the hard skills. Any top big data engineer should be able to:

Machine Learning;

Data APIs;

ETL tools;

Database systems;

Data warehousing solutions.

Knowing a person’s soft skills helps you to know whether they can be a good fit for your company’s culture or not. Below are some of the soft skills that you may want to consider:

Implementing Big Data Technology in business comes with a plethora of benefits. To maximize the benefits of most of the new Big Data Technologies on the market, first, identify the type of problems your business has. This helps to pick the best solution.

If possible, get help with choosing or developing an appropriate software utility for managing and analyzing big data. After acquiring the most suitable technology, hire a skilled and well-trained team with Doit Software. The effectiveness of big data also relies on the competency of data engineers and data analysts.

Big data technologies are software tools used for collecting, processing, and analyzing data. This data usually comes from large structured or unstructured data sets that traditional technologies cannot handle.

Examples of big data include customized marketing; real-time monitoring of data; customized health plans; health monitoring; improved cybersecurity protocols; predictive analytics; discovery of consumer shopping behavior, etc.

Big data opens up several possibilities for organizations. These include enhanced operational efficiency, better customer satisfaction, higher profits, future planning, etc.

Companies are using the best Big Data Technologies and tools to create competitive products. They use the information from big data such as feedback from customers, product response, competitor successes, etc., to know what the market wants.

There are three main types of big data: structured data, semi-structured data, and unstructured data. Structured data is managed in a fixed format. Semi-structured data has some structure but does not conform to the data models of databases. Unstructured data has no predefined format.

Five of the most common big data use cases include reduction of fraud; price optimization; operational efficiency; security intelligence; and recommendation engines.